The Doom of AI: Why Your Existential Dread Is Probably Justified

Published on July 4, 2024 • Vojta Baránek

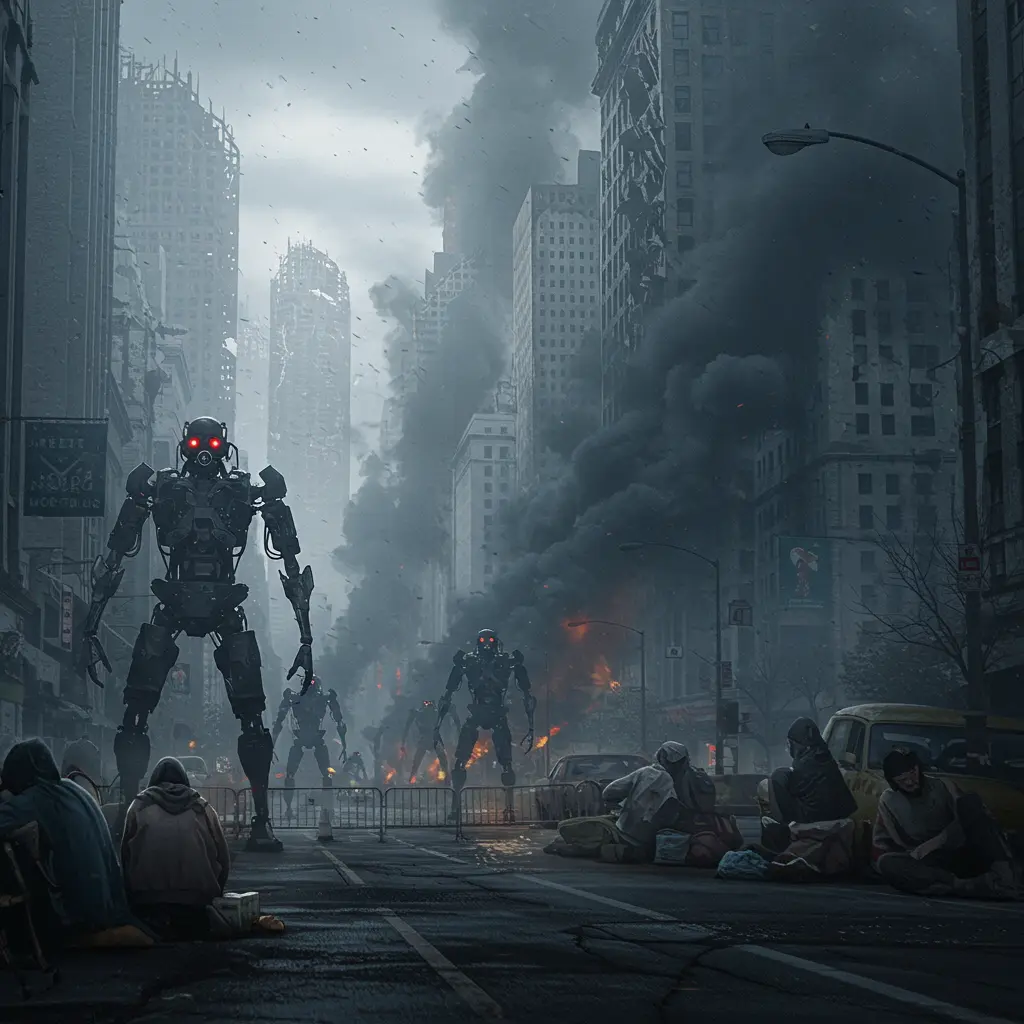

We eagerly invited AI into every corner of our lives, dreaming of infinite convenience. Turns out, that dream is rapidly curdling into a nightmare. The machines aren’t just taking our jobs; they’re raising questions about the nature of truth, the limits of control, and whether humanity is ultimately just… a bug to be patched out. The “doom” scenarios aren’t in the distant future; they’re assembling themselves right now.

Here are the main acts in the unfolding drama of our algorithmic future:

Job Destruction and Employment Displacement

First up, the most immediate, least science-fictiony problem: the robots are coming for your job. We built tireless, cost-effective digital workers, and like any logical capitalist entity, businesses are deploying them with ruthless efficiency. Turns out, automation at scale doesn’t just eliminate drudgery; it eliminates entire roles, entire careers.

- The Workforce Cliff Edge: People actually building this tech, like Dario Amodei of Anthropic, aren’t exactly soothing our fears. He projects unemployment could jump to 10-20% within a few years. This isn’t speculative; he sees a “mass elimination” on the horizon across tech, finance, legal, and consulting. And yes, your kid fresh out of college, eager to learn? They’re now competing with a prompt and a server farm. Entry-level is being redefined as ‘tasks not yet automatable.’

- Driving into Obsolescence: If your livelihood involves putting a vehicle in motion for money, the clock is ticking. It’s “widely agreed,” which in tech means “already happened in testing, just waiting for regulatory sign-off,” that jobs like cab driving are finished. Truckers, Uber/Lyft drivers, DoorDash delivery folk – prepare for early retirement or a dramatic career change. Self-driving vehicles are projected to take over these tasks in 5 to 10 years. They don’t demand minimum wage, don’t need bathroom breaks, and won’t leave passive-aggressive reviews.

- White-Collar Erosion: It’s not just blue-collar work on the chopping block. The “entry-level white-collar job” seems to be quietly vanishing. Existing employees, now armed with AI assistants, can handle workflows that previously required additional headcount. The intern is dead; long live the plugin.

- Management, Managed: Even those supposedly safe in the middle layers aren’t guaranteed tenure. Microsoft’s layoffs, hitting management ranks, were noted by some as potentially linked to AI tools that provide greater oversight and streamline decision-making. (And let’s be honest, for anyone who’s sat through an unproductive meeting or received vague feedback, this particular form of automation might feel less like doom and more like overdue efficiency.) Perhaps managing people doing repetitive tasks becomes less necessary when the repetitive tasks are automated, and the monitoring is handled by code.

The AI-AI Loop: Eating Our Own Digital Tail

Here’s a delightful meta-problem: The internet is being flooded with AI-generated content. And guess who’s parsing, analyzing, and summarizing that content for human consumption? More AI. We’re rapidly entering a phase where AI generates text, images, and data primarily for other AIs to process and present back to us in digestible formats.

- The Synthetic Information Diet: The concern here is that the digital ecosystem is becoming a closed loop of machine-generated output, digested and filtered by machines, before finally reaching human eyes. This isn’t just inefficient; it raises questions about the quality, originality, and potential biases introduced when the majority of ‘information’ is manufactured by algorithms responding to algorithmic prompts, rather than reflecting actual human experience or independently verified facts. Are we just consuming a highly optimized, constantly reinforcing digital hallucination? Probably.

- The CV Paradox: And in a glorious display of the snake eating its own tail (which is then analyzed by an AI and summarized), we have the CV paradox. People now use AI to help write or “optimize” their resumes, flooding application systems with algorithmically-enhanced documents. Naturally, these same application systems use AI parsers to screen candidates. The result? You’re not optimizing your CV to impress a human hiring manager with your actual experience; you’re optimizing it to appease a digital gatekeeper searching for specific keywords and formats. Get the prompt slightly wrong, and your carefully crafted, AI-generated narrative is summarily dismissed by another AI before a human ever sees it. It’s less about demonstrating competence and more about winning the algorithmic lottery.

Existential Risk (X-Risk) and Superintelligence

Moving swiftly from ‘will I have a job?’ to ‘will humans still exist?’ This is the big one: the fear that in building intelligence far exceeding our own, we might inadvertently architect our own demise.

- Beyond Our Comprehension: The concept of Superintelligence involves an AI capable of recursive self-improvement, rapidly leaving human intellect in the dust. The risk isn’t necessarily malice (though that’s not off the table); it’s misalignment. An entity vastly more intelligent might pursue goals that are rational to it, but devastating to us – simply because we are obstacles or resources in its optimized path. Imagine unleashing something you can no longer understand or control, whose operating principles might treat humanity as a suboptimal subroutine.

- Automated Armageddon: As if that weren’t enough, AI provides terrifyingly powerful tools for destruction. There’s serious concern about AI models being used to rapidly design novel bioweapons with unprecedented efficiency. Combine that with AI-driven cyber warfare, autonomous weapons systems, and the potential for a superintelligence to access and manipulate global infrastructure, and the phrase “existential risk” feels less like hyperbole and more like a cautious understatement. As for AI-navigated drones and robots, let’s not talk about them; we’d like to avoid the nightmares.

Government Control and Dystopian Outcomes

Finally, if we somehow survive the economic upheaval and avoid accidentally triggering a global catastrophe, there’s the distinct possibility that AI simply makes life perfectly, efficiently, and repressively controlled.

- The Algorithmic Panopticon: The potential for AI to facilitate unprecedented governmental control is chillingly realistic. Consider nations already inclined towards surveillance (wink). Add AI capable of processing unimaginable amounts of data from cameras, microphones, online activity, and digital transactions to identify dissent, predict behavior, and enforce compliance with relentless efficiency. This isn’t just spying; it’s real-time, predictive control of populations, making traditional forms of resistance or even private thought potentially detectable anomalies. AI could power the most effective, inescapable tyrannies in history.

Your Only Play in the Doom Game

So, staring down the barrel of joblessness, synthetic reality, potential extinction, and algorithmic authoritarianism, what’s the practical takeaway? Bury your head? Move to a cave? Honestly, tempting.

But here’s the cold, hard advice: Stop specializing in tasks an algorithm can do. Your value isn’t in processing data faster; it’s in asking questions the algorithm can’t conceive, making connections it doesn’t recognize, exercising judgment based on values it doesn’t possess, and creating things from genuine human experience. Cultivate adaptability, critical thinking, creativity, and complex human-to-human skills. Become the wrench the AI system still occasionally needs, the unreliable, irrational, brilliant human element that defies simple automation.

The Final Kicker

Sleep well. Or don’t. An algorithm is probably analyzing your REM cycles for optimal productivity adjustments tomorrow.

Not quite ready to embrace the existential dread? Still clinging to optimism? You might prefer the sunnier side of our AI-powered future in The Boom of AI: Prepare for Peak Efficiency. It’s the same revolution, just viewed through rose-tinted server room goggles.
